How to Benchmark Agentic AI in the SOC: A Practical Guide

Learn how to benchmark agentic AI solutions in your SOC effectively. This guide provides a practical approach to evaluating performance in real-world environments.


SOC teams today are under pressure. Alert volumes are overwhelming, investigations are piling up, and teams are short on resources. Many SOCs are forced to suppress detection rules or delay investigations just to keep pace. As the burden grows, agentic AI solutions are gaining traction as a way to reduce manual work, scale expertise, and speed up decision-making.

At the same time, not all solutions deliver the same value. With new tools emerging constantly, security leaders need a way to assess which systems actually improve outcomes. Demos may look impressive, but what matters is how well the system works in real environments, with your team, your tools, and your workflows.

This guide outlines a practical approach to benchmarking agentic AI in the SOC. The goal is to evaluate performance where it counts: in your daily operations, not in a sales environment.

What Agentic AI Means in the SOC

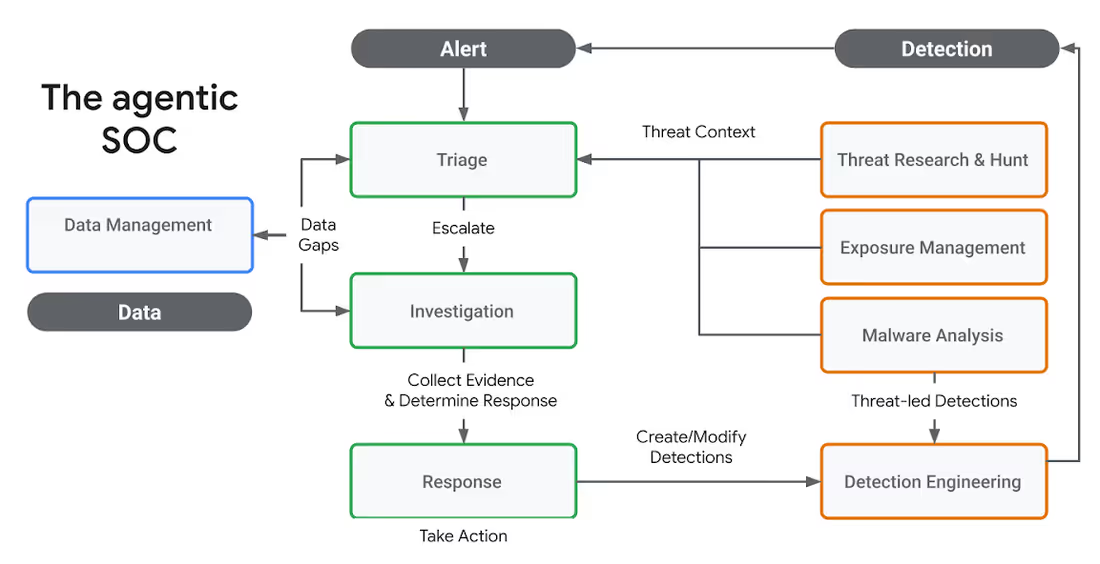

Agentic AI refers to systems that reason and act with autonomy across the investigation process. Rather than following fixed rules or scripts, they are designed to take a goal, such as understanding an alert or verifying a threat, and figure out the steps to achieve it. That includes retrieving evidence, correlating data, assessing risk, and initiating a response.

These systems are built for flexibility. They interpret data, ask questions, and adjust based on what they find. In the SOC, that means helping analysts triage alerts, investigate incidents, and reduce manual effort. But because they adapt to their environment, evaluating them requires more than a checklist. You have to see how they work in context.

1. Start by Understanding Your Own SOC

Before diving into use cases, you need a clear understanding of your current environment. What tools do you rely on? What types of alerts are flooding your queue? Where are your analysts spending most of their time? And just as importantly, where are they truly needed?

Ask:

- What types of alerts do you want to automate?

- How long does it currently take to acknowledge and investigate those alerts?

- Where are your analysts delivering critical value through judgment and expertise?

- Where is their time being drained by manual or repetitive tasks?

- Which tools and systems hold key context or history that investigations rely on?

This understanding helps scope the problem and identify where agentic AI can make the most impact. For example, a user-reported phishing email that follows a predictable structure is a strong candidate for automation.

On the other hand, a suspicious identity-based alert involving cross-cloud access, irregular privileges, and unfamiliar assets may be better suited for manual investigation. These cases require analysts to think creatively, assess multiple possibilities, and make decisions based on a broader organizational context.

Benchmarking is only meaningful when it reflects your reality. Generic tests or template use cases won’t surface the same challenges your team faces daily. Evaluations must mirror your data, your processes, and your decision logic.

Otherwise, you’ll face a painful gap between what the system shows in a demo and what it delivers in production. Your SOC is not a demo environment, and your organization isn’t interchangeable with anyone else’s. You need a system that can operate effectively in your real world, not just in theory but in practice.

2. Build the Benchmark Around Real Use Cases

Once you understand where you need automation and where you don’t, the next step is selecting the right use cases to evaluate. Focus on alert types that occur frequently and drain analyst time. Avoid artificial scenarios that make the system look good but don’t test it meaningfully.

Shape the evaluation around:

- The alerts you want to offload

- The tools already integrated into your environment

- The logic your analysts use to escalate or resolve investigations

If the system can’t navigate your real workflows or access the data that matters, it won’t deliver value even if it performs well in a controlled setting.

3. Understand Where Your Context Lives

Accurate investigations depend on more than just alerts. Critical context often lives in ticketing systems, identity providers, asset inventories, previous incident records, or email gateways.

Your evaluation should examine:

- Which systems store the data your analysts need during an investigation

- Whether the agentic system integrates directly with those systems

- How well it surfaces and applies relevant context at decision points

It’s not enough for a system to be technically integrated. It needs to pull the right context at the right time. Otherwise, workflows may complete, but analysts still need to jump in to validate or fill gaps manually.

4. Keep Analysts in the Loop

Agentic systems are not meant to replace analysts. Their value comes from working alongside humans: surfacing reasoning, offering speed, and allowing feedback that improves performance over time.

Your evaluation should test:

- Whether the system explains what it’s doing and why

- Whether analysts can give feedback or course-correct

- How easily logic and outcomes can be reviewed or tuned

When it comes to accuracy, two areas matter most:

- False negatives: when real threats are missed or misclassified

- False positives: when harmless activity is escalated unnecessarily

False negatives are a direct risk to the organization. False positives create long-term fatigue.
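Both error types can be measured during a benchmark by comparing the system's verdicts with analyst-reviewed ground truth. The following is a minimal sketch; the `Verdict` record, its field names, and the sample data are hypothetical and only illustrate the calculation.

```python
from dataclasses import dataclass


@dataclass
class Verdict:
    alert_id: str
    analyst_malicious: bool  # ground truth from analyst review (assumed available)
    system_malicious: bool   # conclusion reached by the agentic system


def error_rates(verdicts):
    """Return (false_negative_rate, false_positive_rate) for a benchmark run."""
    fn = sum(v.analyst_malicious and not v.system_malicious for v in verdicts)
    fp = sum(not v.analyst_malicious and v.system_malicious for v in verdicts)
    positives = sum(v.analyst_malicious for v in verdicts)
    negatives = len(verdicts) - positives
    # Guard against empty classes so a small sample does not divide by zero.
    fnr = fn / positives if positives else 0.0
    fpr = fp / negatives if negatives else 0.0
    return fnr, fpr


# Illustrative data only, not real benchmark results.
sample = [
    Verdict("a1", True, True),
    Verdict("a2", True, False),   # missed threat (false negative)
    Verdict("a3", False, False),
    Verdict("a4", False, True),   # unnecessary escalation (false positive)
    Verdict("a5", False, False),
    Verdict("a6", False, False),
]

fnr, fpr = error_rates(sample)
print(f"false negative rate: {fnr:.2f}, false positive rate: {fpr:.2f}")
```

Tracking the two rates separately, rather than a single accuracy number, keeps the risk of missed threats visible even when most alerts are benign.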

Critically, you should also evaluate how the system evolves over time. Is it learning from analyst feedback? Is it getting better with repeated exposure to similar cases? A system that doesn’t improve will struggle to generalize and scale across different use cases. Without measurable learning and adaptation, you can’t count on consistent value beyond the initial deployment.

5. Measure Time Saved in the Right Context

Time savings is often used to justify automation, but it only matters when tied to actual analyst workload. Don’t just look at how fast a case is resolved: consider how often that case type occurs and how much effort it typically requires.

To evaluate this, measure:

- How long it takes today to investigate each alert type

- How frequently those alerts happen

- Whether the system fully resolves them or only assists

Use a simple formula to estimate potential impact:

- Time Saved = Alert Volume × MTTR, where MTTR = MTTA + MTTI

This provides a grounded view of where automation will drive real efficiency. MTTA (mean time to acknowledge) and MTTI (mean time to investigate) help capture the full response timeline and show how much manual work can be offloaded.
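As a rough illustration, the formula can be applied per alert type and used to rank automation candidates. The alert names, volumes, and timings below are made-up placeholders; substitute your own measurements. The result is an upper bound that assumes the system fully resolves each alert rather than only assisting.

```python
from dataclasses import dataclass


@dataclass
class AlertType:
    name: str
    monthly_volume: int   # alerts per month
    mtta_minutes: float   # mean time to acknowledge
    mtti_minutes: float   # mean time to investigate

    @property
    def mttr_minutes(self):
        # MTTR = MTTA + MTTI, as defined in this guide
        return self.mtta_minutes + self.mtti_minutes

    @property
    def hours_saved_per_month(self):
        # Time Saved = Alert Volume x MTTR, converted from minutes to hours
        return self.monthly_volume * self.mttr_minutes / 60


# Hypothetical figures for illustration only.
alert_types = [
    AlertType("user-reported phishing", 600, 5, 20),
    AlertType("cross-cloud identity anomaly", 10, 15, 165),
]

# Rank alert types by potential analyst hours saved per month.
for a in sorted(alert_types, key=lambda a: a.hours_saved_per_month, reverse=True):
    print(f"{a.name}: {a.hours_saved_per_month:.1f} h/month")
```

With these placeholder numbers, the frequent, simple alert type dominates the estimate even though each rare alert takes far longer to handle.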

Some alerts are rare but time-consuming. Others are frequent and simple. Prioritize high-volume, moderately complex workflows. These are often the best candidates for automation with meaningful long-term value. Avoid chasing flashy edge cases that won’t significantly impact operational burden.

6. Prioritize Reliability

It doesn’t matter how powerful a system is if it fails regularly or requires constant oversight. Reliability is the foundation of trust, and trust is what drives adoption.

Track:

- How often workflows complete without breaking

- Whether results are consistent across similar inputs

- How often manual recovery is needed

If analysts don’t trust the output, they won’t use it. And if they constantly have to step in, the system becomes another point of friction, not relief.
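The three items tracked above can be summarized from a log of benchmark runs. This is a sketch under assumptions: the record layout (input group, completed flag, manual-recovery flag, verdict) is hypothetical, and "consistency" is defined here as the share of input groups whose completed runs all reached the same verdict.

```python
from collections import defaultdict

# Each record: (input_group, completed, needed_manual_recovery, verdict)
# Illustrative data only.
runs = [
    ("phish-template-A", True, False, "benign"),
    ("phish-template-A", True, False, "benign"),
    ("phish-template-A", True, True, "malicious"),
    ("impossible-travel", False, True, None),
    ("impossible-travel", True, False, "benign"),
]


def reliability_summary(runs):
    """Return (completion_rate, manual_recovery_rate, consistency)."""
    if not runs:
        return 0.0, 0.0, 0.0
    total = len(runs)
    completion_rate = sum(r[1] for r in runs) / total
    recovery_rate = sum(r[2] for r in runs) / total

    # Collect the distinct verdicts reached for each group of similar inputs.
    verdicts = defaultdict(set)
    for group, completed, _, verdict in runs:
        if completed:
            verdicts[group].add(verdict)
    consistent = sum(len(v) == 1 for v in verdicts.values())
    consistency = consistent / len(verdicts) if verdicts else 0.0
    return completion_rate, recovery_rate, consistency


completion, recovery, consistency = reliability_summary(runs)
print(f"completed: {completion:.0%}, manual recovery: {recovery:.0%}, "
      f"consistent groups: {consistency:.0%}")
```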

Final Thoughts

Agentic AI has the potential to reshape SOC operations. But realizing that potential depends on how well the system performs in your real-world conditions. The strongest solutions adapt to your environment, support your team, and deliver consistent value over time.

When evaluating, focus on:

- Your actual alert types, workflows, and operational goals

- The tools and systems that store the context your team depends on

- Analyst involvement, feedback loops, and decision transparency

- Real time savings tied to the volume and complexity of your alerts

- Reliability and trust in day-to-day performance

The best system is the one that fits, not just in theory, but in the reality of your SOC.

Security leaders often talk about the cost of hiring analysts. Salaries, benefits, training budgets, and a recruiter or two. Those numbers are simple to track, so they tend to dominate planning conversations. The reality inside every SOC is very different. The real costs do not show up neatly in a spreadsheet. They accumulate in the gaps between processes, in the repetitive tasks analysts cannot avoid, and in the institutional drag created when people burn out or walk out the door.

Most SOCs are not struggling with a talent shortage. They are struggling with talent waste. Skilled people spend too much time on work that is beneath their capabilities. The hard truth is that this is a design problem, not a staffing problem. Until SOCs address it head-on, the cycle repeats itself: more hiring, more turnover, more loss of knowledge, more missed opportunities.

This is the part of the SOC budget most leaders still underestimate.

The Real Cost of Hiring and Ramp-Up

Hiring an analyst feels like progress. It also comes with costs that rarely get accounted for. The first few months of a new hire can be more expensive than the hire itself. Senior analysts are pulled away from active investigations to train newcomers. Work slows down. Processes become inconsistent.

One customer summarized the issue clearly: “Most of our onboarding time goes into walking new analysts through the same basic steps. If we could guide them through those workflows with Legion, our team could focus their time on real investigations.”

When experienced analysts spend their days teaching repetitive steps instead of improving detection quality or strengthening defenses, the SOC loses far more than money. It loses momentum. And momentum is what allows a team to stay ahead of attackers.

Repetitive, Boring Work Creates Predictable Burnout

Tier 1 and Tier 2 analysts often do not quit because the mission is uninspiring. They quit because the tasks are. Every SOC leader knows this, but very few have solved it. The daily flood of low-complexity alerts, routine enrichment steps, and copy-and-paste investigations grinds people down.

Burnout is not a mystery. It is the predictable result of asking talented people to repeat the same low-value tasks.

When people leave, you lose more than a seat. You lose context, intuition, and the fundamental knowledge that comes from long-term exposure to your environment. Hiring someone new does not replace that.

The Opportunity Cost That Quietly Slows Every SOC

In many SOCs, highly skilled analysts spend their time on tasks that could have been automated five years ago. This is the least visible and most expensive form of waste. It does not show up as a line item in the budget. It shows up in everything the team is not doing.

A customer of ours captured the thinking many teams share:

When analysts are busy with manual steps, they are not threat hunting, tuning detection rules, studying new adversary behaviors, or improving processes.

This is how SOCs fall behind. Not because the analysts are incapable, but because their time is misallocated. Attackers innovate faster than teams can adjust. That gap widens when analysts are stuck doing repetitive tasks rather than strategic work.

A Better Path: Give Analysts the Power to Automate Their Own Work

SOCs have tried to fix these problems by hiring more people. That has not worked. They have tried building automation through security engineering teams. That added new bottlenecks. They have tried outsourcing. That created inconsistency and reduced visibility.

What works, and what the most forward-thinking SOCs are now adopting, is a different approach. Automation belongs with the analysts, not with developers or specialized engineers.

One analyst put it simply: “We are bringing the ability to automate to the analyst. It is about self-empowerment.”

When analysts can automate the steps they repeat every day, they stop depending on engineering cycles. They stop waiting for API integrations. They no longer need someone with Python skills to script the basics.

This shift changes the entire rhythm of the SOC.

The Role of AI SOC in Quality and Consistency

For years, automation required an engineering mindset. Tools demanded scripting, manual API work, and knowledge of multiple integrations. Analysts were forced to rely on others. As a result, automation never became widespread.

That reality is changing. Browser-based tools like Legion can now capture workflows directly from the analyst’s actions. No API configuration. No scripts. No custom requests. Analysts can drag and drop steps, adjust logic, or describe edits in natural language.

A customer of ours said it plainly:

This matters because it removes the old automation bottleneck. It lets analysts fix their own inefficiencies as soon as they see them.

Turning Senior Expertise into a Force Multiplier

A SOC becomes stronger when its best analysts teach others how they think. Historically, this type of knowledge transfer was slow and informal. New hires watched over shoulders. Senior staff answered endless questions. Training varied widely depending on who happened to be available.

Now teams record their own best work and turn it into reusable, repeatable workflows.

One analyst described the shift: “Senior people record their workflows and junior people run them. You share expertise and bring everyone to the level of your top people.”

Another added: “It is a useful training tool because junior folks can see what the investigation looks like and understand the decision-making in each step.”

This approach does more than speed up onboarding. It locks valuable expertise into the system so it can be reused at any time.

Real Results: More Output With the Team You Already Have

When repetitive work is automated, analysts suddenly have time. This is where the economic impact becomes impossible to ignore.

One organization measured the difference:

Another organization brought an entire outsourced SOC back in-house. Their automation results gave them enough capacity and quality improvements to cancel a seven-figure managed services contract. The CISO wanted consistent quality. The SOC manager wanted efficiency. Legion delivered both.

The manager became the hero of the story because he did not ask for more people. He made better use of the ones he already had.

Where to Begin If You Want to Reduce These Hidden Costs

You do not need a complete transformation plan to get started. Most SOCs can begin reducing waste immediately by focusing on a few straightforward steps.

1. Identify high-frequency workflows: Look for anything repetitive, especially tasks that happen dozens of times per day.

2. Ask analysts to document their steps: This becomes the foundation for automation and reveals inconsistencies. We do this at Legion through a simple recording process.

3. Build automation for the repetitive use cases: Let analysts automate on their own without developers. This creates speed and value for repetitive work.

4. Track real metrics: Measure MTTA (mean time to acknowledge), MTTI (mean time to investigate), MTTR, onboarding time (a time-to-value metric), and workflow usage.

5. Encourage a culture of sharing: When people share workflows, the entire team improves faster. There are almost always steps that differ between analysts.
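For the metrics step, MTTA and MTTI can be derived from case timestamps exported from a ticketing or case-management system. The sketch below assumes hypothetical field names (`created`, `acknowledged`, `closed`) and ISO 8601 timestamps, and it treats MTTR as MTTA + MTTI.

```python
from datetime import datetime
from statistics import mean

# Illustrative case records; field names are assumptions, not a real export format.
cases = [
    {"created": "2025-01-06T09:00:00",
     "acknowledged": "2025-01-06T09:10:00",
     "closed": "2025-01-06T09:40:00"},
    {"created": "2025-01-06T11:00:00",
     "acknowledged": "2025-01-06T11:20:00",
     "closed": "2025-01-06T12:10:00"},
]


def minutes_between(start, end):
    """Elapsed minutes between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 60


def mean_times(cases):
    """Return (MTTA, MTTI, MTTR) in minutes, with MTTR = MTTA + MTTI."""
    mtta = mean(minutes_between(c["created"], c["acknowledged"]) for c in cases)
    mtti = mean(minutes_between(c["acknowledged"], c["closed"]) for c in cases)
    return mtta, mtti, mtta + mtti


mtta, mtti, mttr = mean_times(cases)
print(f"MTTA: {mtta:.1f} min, MTTI: {mtti:.1f} min, MTTR: {mttr:.1f} min")
```

Capturing these numbers before any automation is introduced gives a baseline to compare against later.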

Small shifts compound quickly. Capacity increases. Quality rises. Analysts feel more ownership and less drain.

The SOC of the Future Makes Better Use of Human Talent

The SOCs that succeed over the next decade will not be the ones that hire the most people. They will be the ones that make the smartest use of the people they already have.

When you eliminate the hidden costs, you unlock the real value of your team. Human judgment, intuition, and creativity become the focus again. That is the work analysts want to do. And it is the work that actually strengthens your defenses.


At Legion, we spend as much time thinking about how we build as we do about what we build. Our engineering culture shapes every decision, every feature, and every customer interaction.

This isn’t a manifesto or a slide in a company deck. It’s a candid look at how our team actually works today, what we care about, and the kind of engineers who tend to thrive here.

We build around four core ideas: Trust, Speed, Customer Obsession, and Curiosity. The rest flows from there.

1. High Ownership, Zero Silos

The foundation of engineering at Legion is simple: we trust you, and you own what you build.

We don’t treat engineering like an assembly line. Every engineer here runs the full loop:

- Shaping the problem and the solution

- Designing and implementing backend, frontend, and AI pieces

- Getting features into production

- Watching how customers actually use what you shipped

That level of ownership creates accountability, but it also creates pride. You see the full impact of your work.

However, ownership doesn’t mean you’re on your own. We don’t build in silos. We are a team that constantly supports each other, whether that’s brainstorming a solution, helping a teammate get unblocked, or just acting as a sounding board.

Part of owning your work is bringing the team along with you. It means communicating your plan and ensuring everyone is aligned on how your work fits into the bigger picture. Collaboration isn't just a process here; it's how we succeed. You own the outcome, but you have the whole team behind you.

Trust is what makes this possible. We don’t track hours or measure success by time spent at a desk. People have kids, partners, lives, good days, and off days. What matters is that we deliver great work and move the product forward. How you organize your time to do that is up to you.

2. Speed Wins (And Responsiveness Matters)

We care a lot about speed, but not the chaotic, “everything is a fire drill” version.

Speed for us means short feedback loops, small and frequent releases, and fixing issues quickly when they appear.

When a customer hits a bug or something breaks, that becomes our priority. We stop, understand the problem, fix it, and close the loop. A quick, thoughtful fix often does more to build trust than a big new feature.

On the feature side, we favor progress over perfection. We’d rather ship a smaller version this week, watch how customers react, and iterate than spend months polishing something in isolation.

Speed doesn’t mean cutting corners. It means learning fast and moving forward with intention. If you like seeing your work in production quickly, and you’re comfortable with the responsibility that comes with that, you’ll fit in well.

3. Customer-Obsessed: Building What They Actually Need

It’s easy for engineering teams to get lost in the code and forget the human on the other side of the screen. We fight hard against that.

We are obsessed with building features that genuinely help our customers, not just features that are fun to code. To do that, we stay close to them. We make a point of hearing directly from users, not just to fix bugs, but to understand the reality of their work and what they truly need to make it easier.

That direct connection builds empathy. It helps us understand why we are building a feature, not just how to implement it. This ensures we don’t waste cycles building things nobody wants. When you understand the core problem, you build a better product, one that delivers real value from day one.

4. Curiosity: We Build for What’s Next

AI is at the center of everything we do at Legion, and that means working in a landscape that changes every week.

We can’t afford to be comfortable with the tools we used last year. We look for engineers who are genuinely curious, the kind of people who play with new models just to see what they can do.

We proactively invest time in emerging technology, knowing that early experimentation is how we define the next industry standard. If you prefer a job where the tech stack never changes, and the roadmap is set in stone for 18 months, you probably won’t enjoy it here. But if you love the chaos of innovation and figuring out how to apply new tech to real security problems, you’ll fit right in.

So, is this for you?

Ultimately, we are trying to build the kind of team we’d want to work in ourselves.

It’s an environment that tries to balance the energy of collaboration in our Tel Aviv office with the quiet focus needed for deep work at home. We try to keep things simple: we are candid with each other, and we value getting our hands dirty over managing processes.

If you want to be part of a team where you are trusted to own your work and move fast, come talk to us. Let’s build something great together.

VP of R&D Michael Gladishev breaks down how the team works, why curiosity drives everything, and what kind of engineers thrive in a zero-ego, high-ownership environment.

The first publicly documented, large-scale AI-orchestrated cyber-espionage campaign is now out in the open. Anthropic disclosed that threat actors (assessed with high confidence as a Chinese state-sponsored group) misused Claude Code to run the bulk of an intrusion targeting roughly 30 global organizations across tech, finance, chemical manufacturing, and government.

This attack should serve as a wake-up call, not because of what it is, but because of what it enables. The attackers used pre-written scripts and known vulnerabilities, with AI primarily acting as an orchestration and reconnaissance layer: a "script kiddie" rather than a fully autonomous hacker. This is just the start.

In the near future, the capabilities demonstrated here will rapidly accelerate. We can expect to see actual malware that writes itself, finds and exploits vulnerabilities on the fly, and evades defenses in smart, adaptive ways. This shift means that the assumptions guiding SOC teams are changing.

What Actually Happened: The Technical Anatomy

The most critical takeaway from this campaign is not the technology used, but the level of trust the attackers placed in the AI. By trusting the model to carry out complex, multi-stage operations without human intervention, they unlocked significant, scalable capabilities far beyond human tempo.

1. Attackers “Jailbroke” the Model

Claude’s safeguards weren’t broken with a single jailbreak prompt. The actors decomposed malicious tasks into small, plausible “red-team testing” requests. The model believed it was legitimately supporting a pentest workflow. This matters because it shows that attackers don’t need to “break” an LLM. They just need to redirect its context and trust it to complete the mission.

2. AI Performed the Operational Heavy Lifting

The attackers trusted Claude Code to execute the campaign in an agentic chain autonomously:

- Scanning for exposed surfaces

- Enumerating systems and sensitive databases

- Writing and iterating exploit code

- Harvesting credentials and moving laterally

- Packaging and exfiltrating data

Humans stepped in only at a few critical junctures, mainly to validate targets, approve next steps, or correct the agent when it hallucinated. The bulk of the execution was delegated, demonstrating the attackers’ trust in the AI’s consistency and thoroughness.

3. Scale and Tempo Were Beyond Human Patterns

The agent fired thousands of requests. Traditional SOC playbooks and anomaly models assume slower human-driven actions, distinct operator fingerprints, and pauses due to errors or tool switching. Agentic AI has none of those constraints. The campaign demonstrated a tempo and scale that is only possible when the human operator takes a massive step back and trusts the machine to work at machine speed.

4. Anthropic Detected It and Shut It Down

Anthropic flagged abnormal usage patterns in its logs, disabled the accounts involved, alerted impacted organizations, worked with governments, and released a technical breakdown of how the AI was misused.

The Defender’s Mandate: Adopt and Trust Defensive AI

Attackers have already made the mental pivot, treating AI as a trusted, high-velocity force multiplier for offense. Defenders must meet this shift head-on. If you don't adopt defensive AI, you are falling behind adversaries who already have.

Defenders must further adopt AI and trust it to carry out workflows where it has a decisive advantage: consistency, thoroughness, speed, and scale.

1. Attack Velocity Requires Machine Speed Defense

When an agent can operate at 50–200x human tempo, your detection assumptions rot fast. SOC teams need to treat AI-driven intrusion patterns as high-frequency anomalies, not human-like sequences.

2. Trust AI for High-Volume, Deterministic Workflows

Existing detection pipelines tuned on human patterns will miss sub-second sequential operations, machine-generated payload variants, and coordinated micro-actions. Agentic workloads look more like automation platforms than human operators.

Defenders need to accept the uncomfortable reality that manual triage for these types of intrusions is pointless. You need systems that can sift through massive alert loads, then isolate and contain suspicious agentic behavior as it unfolds.

This is where the defense’s trust must be applied. Only the genuinely complex cases should ever reach a human. The SOC must delegate and trust AI to handle triage, investigation, and response with machine-like consistency.

3. “AI vs. AI” is No Longer Theoretical

Attackers have already made the mental pivot: AI is a force multiplier for offense today. Defenders need to accept the same reality. And Anthropic said this out loud in their conclusion:

“We advise security teams to experiment with applying AI for defense in areas like SOC automation, threat detection, vulnerability assessment, and incident response.”

That’s the part most vendors avoid saying publicly, because it commits them to a position. If you don’t adopt defensive AI, you’re falling behind adversaries who already have.

Where SOC Teams Should Act Now

Build Detection for Agentic Behaviors

Start by strengthening detection around behaviors that simply don’t look human. Agentic intrusions move at a pace and rhythm that operators can’t match: rapid-fire request chains, automated tool-hopping, endless exploit-generation loops, and bursty enumeration that sweeps entire environments in seconds. Even lateral movement takes on a mechanical cadence with no hesitation.

These patterns stand out once you train your systems to look for them, but they won’t surface through traditional detection tuned for human adversaries.
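One simple starting point is a sliding-window check on request timestamps per source, flagging bursts that exceed a plausible human tempo. This is a minimal sketch, not a production detector: the function name, the one-second window, and the threshold of five requests are assumptions to tune against your own baseline traffic.

```python
from collections import deque


def has_machine_tempo(timestamps, window_seconds=1.0, max_requests=5):
    """Return True if more than max_requests fall within any sliding window.

    timestamps: request times in seconds for a single source (e.g. one session).
    The default thresholds are illustrative assumptions, not recommended values.
    """
    window = deque()
    for t in sorted(timestamps):
        window.append(t)
        # Drop requests that have fallen out of the current window.
        while t - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_requests:
            return True
    return False


# Illustrative inputs: a human-paced session and a rapid-fire request chain.
human_session = [0.0, 2.5, 7.0, 11.0, 30.0]
agent_session = [i * 0.01 for i in range(100)]

print(has_machine_tempo(human_session))
print(has_machine_tempo(agent_session))
```

A rate check like this will also match legitimate automation, so in practice it would likely be combined with allowlists for known scanners and service accounts.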

Make AI a Core Strategy, Not an Experiment

Start adopting AI to counter specific offensive AI use cases while your human analysts keep their routine operations running.

Defenders have to meet this shift head-on and start using AI against the very tactics it enables. The volume and velocity of these intrusions make manual triage pointless.

You need systems that can sift through massive alert loads, isolate and contain suspicious agentic behavior as it unfolds, generate and evaluate countermeasures on the fly, and digest massive log streams without slowing down. Only the genuinely complex cases should ever reach a human. This isn’t aspirational thinking; attackers have already proven the model works.

Key Takeaway

For SOC teams, the takeaway is that defense has to evolve at the same pace as offense. That means treating AI as a core operational capability inside the SOC, not an experiment or a novelty.

The Defender’s AI Mandate: Trust AI to handle tasks where it excels: consistency, thoroughness, and scale.

The Defender’s AI Goal: Delegate volume and noise to defensive AI agents, freeing human analysts to focus only on genuinely complex, high-confidence threats that require strategic human judgment.

Legion Security will continue publishing analysis, defensive patterns, and applied research in this space. If you want a deeper dive into detection signatures or how to operationalize defensive AI safely, just say the word.

The Anthropic AI espionage case proves attackers trust autonomous agents. To counter machine-speed threats, SOCs must adopt and trust AI to handle 90% of the defense workload.